Describe the Gradient Boosting Algorithm and Its Use Cases

There is a lot of textual information that requires management and analysis. AdaBoost and gradient boosting are ensemble techniques used in machine learning to improve the performance of weak learners.

What Is Xgboost Data Science Nvidia Glossary

However, instead of assigning different weights to the classifiers after every iteration, this method fits each new model to the residuals of the previous prediction and then minimizes the loss when adding the latest prediction.
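The residual-fitting idea described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a production implementation: it assumes squared-error loss, one-dimensional inputs, and one-split regression stumps as weak learners. The helper names (`fit_stump`, `boost`, `predict`, `mse`) and the toy data are hypothetical and do not come from any library.

```python
def fit_stump(xs, targets):
    """Fit a one-split regression stump on 1-D inputs by least squares."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # every split that leaves both sides non-empty
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((t - left_mean) ** 2 for t in left)
                 + sum((t - right_mean) ** 2 for t in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def boost(xs, ys, n_rounds=20, learning_rate=0.5):
    """Fit each new stump to the residuals left by the current ensemble."""
    base = sum(ys) / len(ys)  # starting model: a constant (the mean)
    predictions = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * stump(x)
                       for p, x in zip(predictions, xs)]
    return base, learning_rate, stumps


def predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(stump(x) for stump in stumps)


def mse(model, xs, ys):
    return sum((y - predict(model, x)) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Toy data, made up for illustration only.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [1.2, 1.9, 3.2, 3.9, 5.1, 6.2, 6.8, 8.1]

mse_one_round = mse(boost(xs, ys, n_rounds=1), xs, ys)
mse_twenty_rounds = mse(boost(xs, ys, n_rounds=20), xs, ys)
```

On the training data, the error after twenty rounds is lower than after one, because every stump removes part of what the previous ensemble got wrong. Training error alone says nothing about overfitting, which would need a held-out set to assess.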

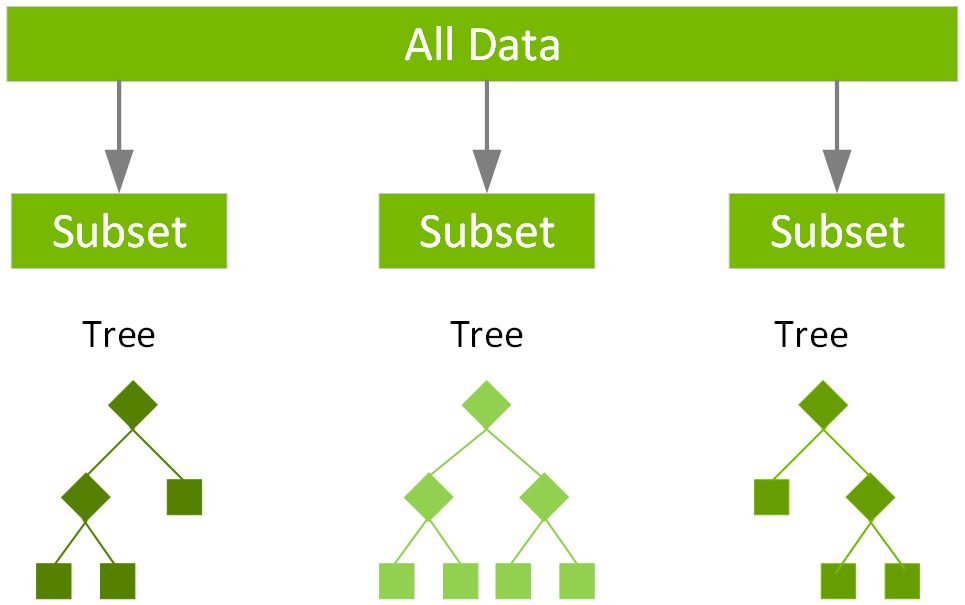

It continues to be one of the most successful ML techniques in Kaggle competitions and is widely used in practice across a variety of use cases. In this paper we describe the original boosting algorithm and summarize boosting methods for regression. The idea behind a boosting algorithm is to train predictors successively, where every subsequent model tries to fix the flaws of its predecessor.

XGBoost is easy to work with: it is transparent and allows easy plotting of trees, although it has no integrated categorical feature encoding. Boosting combines many simple models into a single composite one.

Gradient boosting machines like XGBoost, LightGBM, and CatBoost are the go-to machine learning algorithms for training on tabular data. The learning process continues until all N trees have been trained.

Understanding Gradient Boosting. An inner product space $\mathcal{X}$ containing functions mapping from $X$ to some set $Y$. A gradient boosting classifier combines several weaker models into a single strong model with highly predictive output.

Convergence trends in different variants of gradient descent. Gradient boosting is a popular, widely used type of boosting in machine learning.

In gradient descent, if the cost function is convex, the algorithm converges to a global minimum (given a suitable learning rate); if the cost function is not convex, it may only converge to a local minimum. Gradient Tree Boosting.
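The convergence behaviour of gradient descent on convex and non-convex cost functions can be illustrated with a small sketch. The two cost functions, the learning rate, and the step count below are assumptions chosen for illustration; `gradient_descent` is a hypothetical helper, not a library function.

```python
def gradient_descent(grad, start, learning_rate=0.01, steps=2000):
    """Plain gradient descent on a one-dimensional function."""
    x = start
    for _ in range(steps):
        x -= learning_rate * grad(x)
    return x


# Convex cost f(x) = (x - 3)^2 has a single minimum at x = 3.
def convex_grad(x):
    return 2 * (x - 3)


# Non-convex cost g(x) = x^4 - 3x^2 + x has two separate minima.
def nonconvex_cost(x):
    return x ** 4 - 3 * x ** 2 + x


def nonconvex_grad(x):
    return 4 * x ** 3 - 6 * x + 1


# Convex case: both starting points end at the same (global) minimum.
from_left = gradient_descent(convex_grad, start=-5.0)
from_right = gradient_descent(convex_grad, start=10.0)

# Non-convex case: the starting point decides which minimum is reached.
left_basin = gradient_descent(nonconvex_grad, start=-2.0)
right_basin = gradient_descent(nonconvex_grad, start=2.0)
```

With these settings, both convex runs end near x = 3, while the two non-convex runs settle in different basins. The run that starts on the right stops at a minimum whose cost is higher than the one found from the left, which is the local-minimum behaviour the text describes.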

Data scientists can use gradient boosting for sentiment analysis, which plays a significant role in text classification. The key idea is to set the target outcomes for the next model so as to minimize the error.

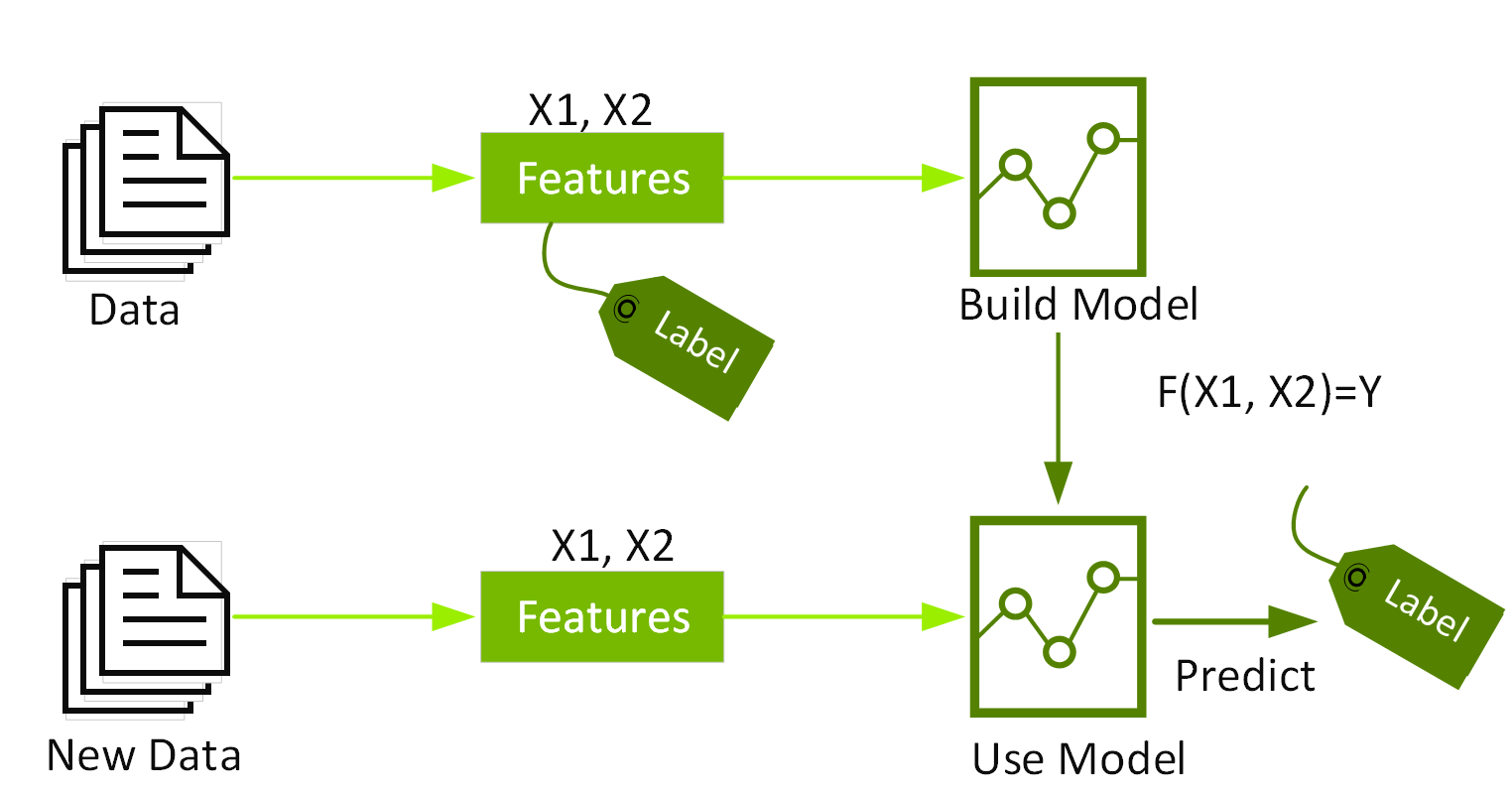

In batch gradient descent, the algorithm follows a fairly direct path towards the minimum. Gradient boosting is a boosting technique that can be applied to classification or regression problems. The base learners of gradient boosting algorithms are typically Classification and Regression Trees (CART).

A weak learner $\mathcal{L}(F)$ that accepts $F \in \mathrm{lin}(\mathcal{F})$ and returns $f \in \mathcal{F}$ with a large value of $-\langle \nabla C(F), f \rangle$.

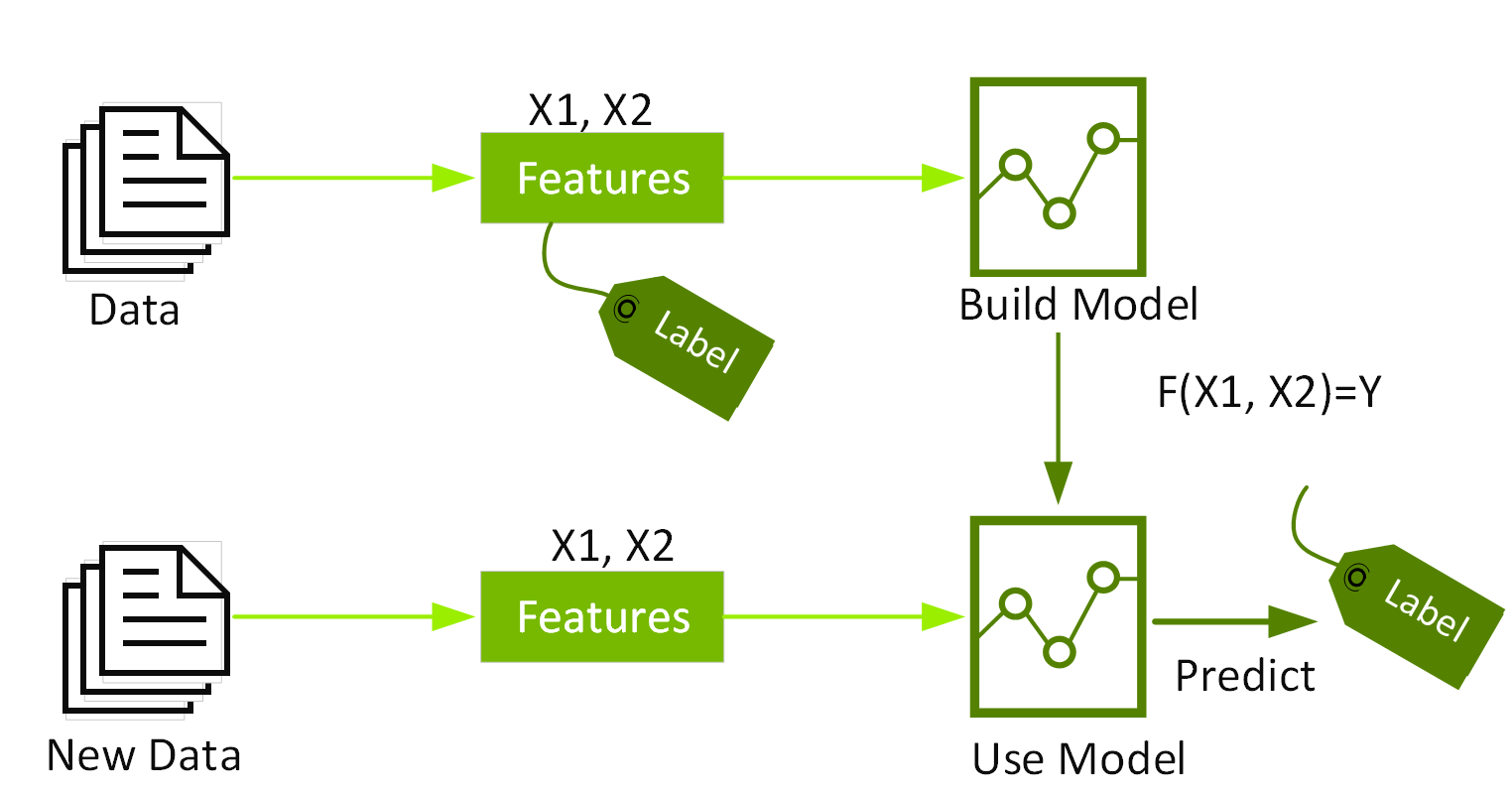

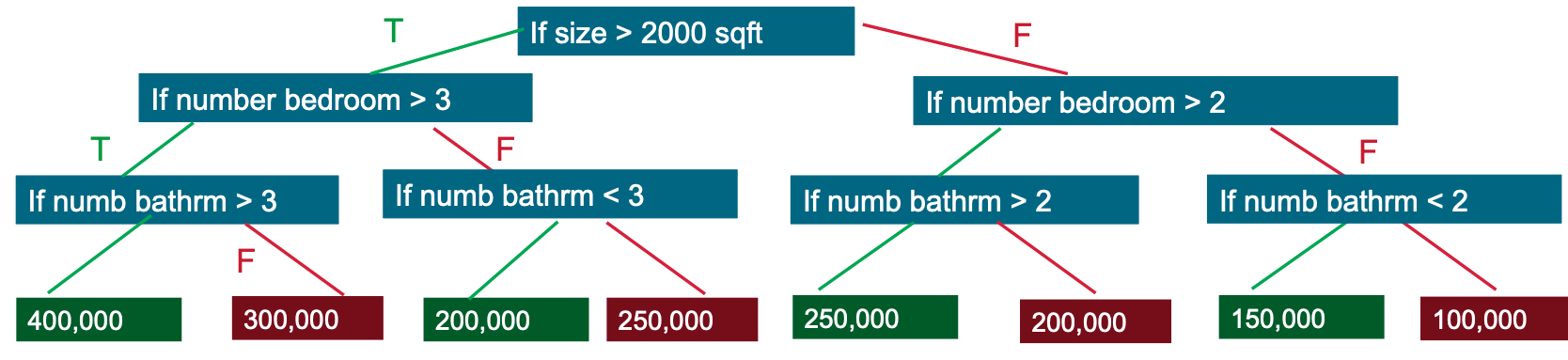

Gradient boosting trains models sequentially. It relies on the intuition that the best possible next model, when combined with the previous models, minimizes the overall prediction error. It is an ensemble method that adds trained predictors one at a time and assigns each of them a weight.

Gradient boosting, being a greedy algorithm, can easily overfit a dataset. We propose a new boosting algorithm for regression problems, also derived from a central objective function, which retains these properties. Over the years, gradient boosting has found applications across various technical fields.

In such cases, regularization methods that penalize different parts of the algorithm can be used; they typically improve the algorithm's performance by reducing overfitting. Gradient boosting relies on the expectation that the next model will reduce prediction errors when blended with the previous ones. It is a general method for building sequences of increasingly complex additive models $F_m = F_0 + \sum_{i=1}^{m} h_i$, where the $h_i$ are very simple models called base learners and $F_0$ is a starting model, e.g. a model that predicts that $y$ is equal to a constant.
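One common way to write the additive model, with shrinkage as a simple form of regularization, is sketched below. The symbols are assumptions introduced here for illustration: $F_0$ is the constant starting model, $h_m$ is the base learner added at step $m$, and $\nu$ is a learning rate.

$$
F_0(x) = c, \qquad F_m(x) = F_{m-1}(x) + \nu\, h_m(x), \qquad 0 < \nu \le 1
$$

Unrolling the recursion gives $F_M(x) = c + \nu \sum_{m=1}^{M} h_m(x)$. Choosing $\nu < 1$ scales down the contribution of every base learner, which usually means more learners are needed but each one has less opportunity to fit noise.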

In gradient boosting, every predictor learns from the errors of its predecessors, i.e. it corrects the error made by the previous predictor to produce a better model with a lower error rate. Do some quick online research to find a case study and use it to describe how and where the gradient boosting (GB) modeling method is applied.

Gradient boosting is an iterative functional gradient algorithm, i.e. an algorithm that minimizes a loss function by iteratively choosing a function that points in the direction of the negative gradient. A class of base classifiers $\mathcal{F} \subseteq \mathcal{X}$. Most people who work in data science and machine learning will know that gradient boosting is one of the most powerful and effective algorithms out there.
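The phrase "a function that points towards the negative gradient" can be made concrete with a short worked equation. The notation is an assumption chosen for illustration: $L$ is a differentiable loss, $F_{m-1}$ is the model after $m-1$ steps, and $r_{i}$ is the target that the next base learner is fitted to for training point $(x_i, y_i)$.

$$
r_{i} = -\left[\frac{\partial L\bigl(y_i, F(x_i)\bigr)}{\partial F(x_i)}\right]_{F = F_{m-1}}
$$

For the squared-error loss $L(y, F) = \tfrac{1}{2}\,(y - F)^2$, the derivative with respect to $F$ is $-(y - F)$, so

$$
r_{i} = y_i - F_{m-1}(x_i).
$$

In this special case the negative gradient is exactly the ordinary residual, which is why fitting the next model to the residuals and following the negative gradient describe the same step.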

Fitting a model to the data. Gradient boosting is similar to several weak people carrying a heavy piece of metal up a number of stairs: no single one of them is able to do it alone.

A differentiable cost functional C. Gradient Boosting in Classification.
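For classification, a differentiable cost commonly paired with gradient boosting is the binary log loss on a raw score (log-odds). The sketch below assumes that setup and checks numerically that the negative gradient of the loss with respect to the score equals `y - sigmoid(score)`, the quantity the next base learner would be fitted to. All function names are hypothetical.

```python
import math


def sigmoid(score):
    """Map a raw score (log-odds) to a probability."""
    return 1.0 / (1.0 + math.exp(-score))


def log_loss(y, score):
    """Binary log loss as a function of the raw score; y is 0 or 1."""
    p = sigmoid(score)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def pseudo_residual(y, score):
    """Negative gradient of the log loss with respect to the score."""
    return y - sigmoid(score)


def numeric_negative_gradient(y, score, eps=1e-6):
    """Central finite-difference estimate, used only as a sanity check."""
    return -(log_loss(y, score + eps) - log_loss(y, score - eps)) / (2 * eps)


# (label, current raw score) pairs, made up for illustration.
checks = [(1, 0.0), (0, 0.0), (1, 2.0), (0, -1.5)]
max_gap = max(abs(pseudo_residual(y, s) - numeric_negative_gradient(y, s))
              for y, s in checks)
```

A positive example that the current model scores at 0 (probability 0.5) gets a pseudo-residual of 0.5, pushing the next learner to raise its score; a negative example with the same score gets -0.5.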

